Dealing with Model Concept Drift in Networks with LEAF

LEAF: Navigating Concept Drift in Cellular Networks

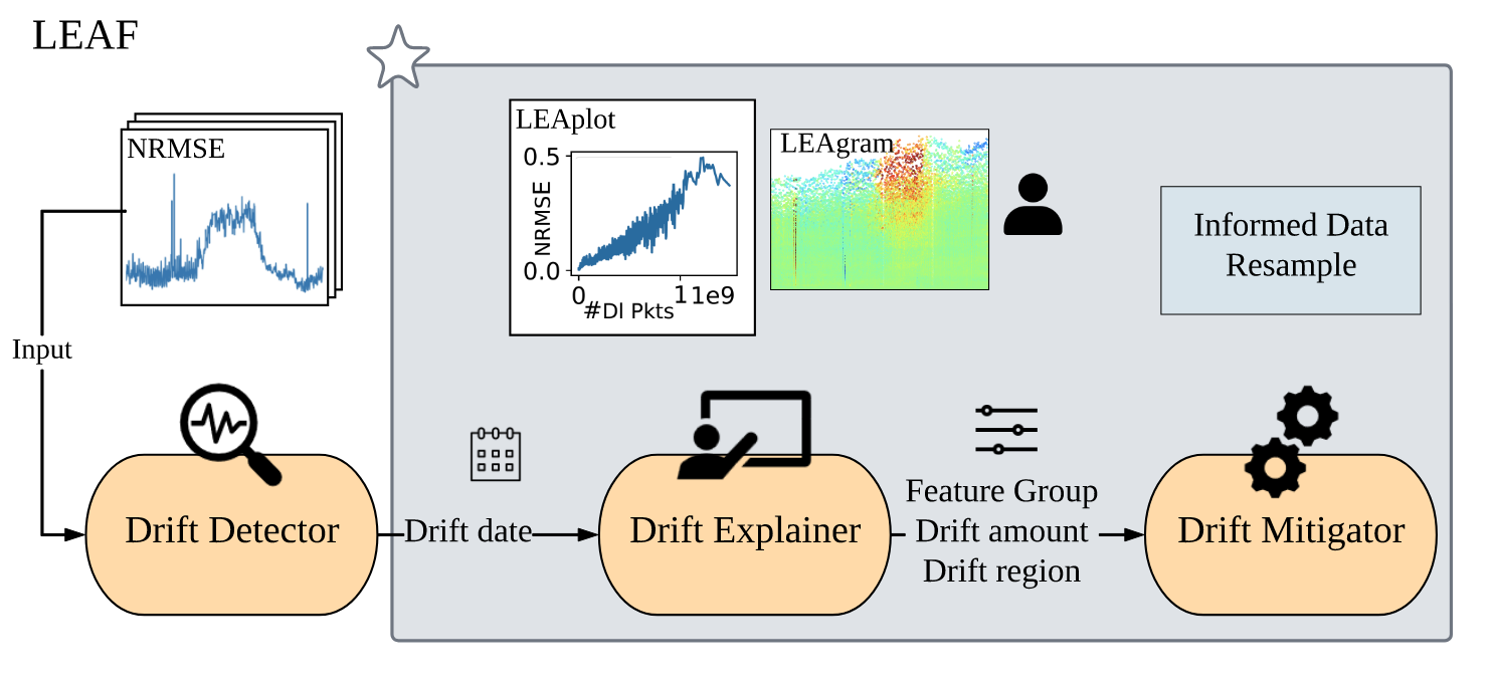

Abstract. Operational networks commonly rely on machine learning models for many tasks, including detecting anomalies, inferring application performance, and forecasting demand. Yet, model accuracy can degrade due to concept drift, where either the relationships between features and the target to be predicted, or the features themselves change. Mitigating concept drift is an essential part of operationalizing machine learning models in general, but is of particular importance in networking’s highly dynamic deployment environments. In this paper, we first characterize concept drift in a large cellular network for a major metropolitan area in the United States. We find that concept drift occurs across many important key performance indicators (KPIs), independently of the model, training set size, and time interval—thus necessitating practical approaches to detect, explain, and mitigate it. We then show that frequent model retraining with newly available data is not sufficient to mitigate concept drift, and can even degrade model accuracy further. Finally, we develop a new methodology for concept drift mitigation, Local Error Approximation of Features (LEAF). LEAF works by detecting drift; explaining the features and time intervals that contribute the most to drift; and mitigating it using forgetting and over-sampling. We evaluate LEAF against industry-standard mitigation approaches (notably, periodic retraining) with more than four years of cellular KPI data. Our tests with a major cellular provider in the US show that LEAF consistently outperforms periodic and triggered re-training on complex, real-world data while reducing costly retraining operations.

The Challenge of Concept Drift

Machine learning models have become essential tools for cellular network operators, helping them monitor network performance, detect anomalies, and forecast demand. However, these models face a critical challenge: concept drift, where accuracy degrades over time as network conditions and usage patterns change. For a model deployed in a cellular network, concept drift occurs when either:

- The relationships between input features and target predictions change

- The features themselves evolve over time
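The two cases can be stated a little more formally. The notation below is not from the original post: it assumes $X$ stands for the input features, $y$ for the target KPI, and $P_t$ for their joint distribution at time $t$.

```latex
P_t(X, y) = P_t(y \mid X)\, P_t(X),
\qquad
\text{drift between } t_0 \text{ and } t_1 \iff P_{t_0}(X, y) \neq P_{t_1}(X, y)
```

Under this factorization, the first bullet corresponds to a change in $P_t(y \mid X)$ and the second to a change in $P_t(X)$.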

This drift is particularly problematic in cellular networks due to factors like:

- Dynamic signal interference from environmental changes

- Seasonal variations in usage patterns

- Network infrastructure updates

- Sudden shifts in behavior (like those seen during COVID-19)

Traditional approaches to handling drift often rely on regularly retraining models with new data. However, the paper shows that frequent retraining with newly available data is not sufficient to mitigate concept drift and can even degrade model accuracy further.
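To make the baselines concrete, here is a minimal sketch of the two retraining policies the abstract names, periodic and triggered. The function names, the error threshold, and the `cooldown` parameter are illustrative assumptions, not details from the paper, and the toy does not model how retraining changes subsequent error.

```python
from typing import List, Sequence


def periodic_schedule(n_steps: int, period: int) -> List[int]:
    """Retrain every `period` steps, regardless of how the model is doing."""
    return list(range(period, n_steps, period))


def triggered_schedule(errors: Sequence[float], threshold: float,
                       cooldown: int = 1) -> List[int]:
    """Retrain when observed error exceeds `threshold`.

    At least `cooldown` steps must separate two retrains (hypothetical knob).
    """
    steps: List[int] = []
    last = -cooldown
    for t, err in enumerate(errors):
        if err > threshold and t - last >= cooldown:
            steps.append(t)
            last = t
    return steps


# Toy per-step error series with two bursts of degraded accuracy.
errors = [0.10, 0.11, 0.10, 0.12, 0.30, 0.31,
          0.12, 0.11, 0.10, 0.35, 0.12, 0.11]

print(periodic_schedule(len(errors), period=3))                  # [3, 6, 9]
print(triggered_schedule(errors, threshold=0.25, cooldown=2))    # [4, 9]
```

The periodic policy retrains at steps 3 and 6 even though error is low there, while the triggered policy only reacts once error has already risen.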

How LEAF Works

LEAF takes a three-pronged approach to managing concept drift:

- Detection: Uses statistical methods to identify when drift is occurring

- Explanation: Helps operators understand why drift is happening by:

  - Identifying which features contribute most to the drift

  - Visualizing error patterns through tools like LEAplot and LEAgram

  - Mapping errors to specific network regions and time periods

- Mitigation: Strategically updates models by:

  - Selectively “forgetting” less relevant historical data

  - Over-sampling from important regions of the feature space

  - Creating temporal ensembles to handle different drift patterns
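The three steps above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the LEAF implementation. The post only says "statistical methods", so the two-sample Kolmogorov-Smirnov test is an assumed choice for detection. The per-bin error summary is a stand-in for the explanation step (it is not LEAplot or LEAgram). The `keep_last` and `error_threshold` parameters are hypothetical knobs for forgetting and over-sampling.

```python
import math
from bisect import bisect_right
from typing import List, Sequence, Tuple

Row = Tuple[float, float]  # (feature value, target value); hypothetical layout


def ks_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sample KS statistic: largest gap between the two empirical CDFs."""
    sa, sb = sorted(a), sorted(b)
    return max(
        abs(bisect_right(sa, v) / len(sa) - bisect_right(sb, v) / len(sb))
        for v in sa + sb
    )


def drift_detected(reference: Sequence[float], recent: Sequence[float],
                   alpha: float = 0.05) -> bool:
    """Detection: flag drift when recent errors are distributed differently.

    Uses the standard large-sample critical value of the two-sample KS test.
    """
    n, m = len(reference), len(recent)
    critical = math.sqrt(-0.5 * math.log(alpha / 2)) * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, recent) > critical


def mean_error_per_bin(values: Sequence[float], errors: Sequence[float],
                       n_bins: int = 4) -> List[float]:
    """Explanation: mean absolute error per equal-width bin of one feature.

    Empty bins report 0.0.
    """
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant features
    sums, counts = [0.0] * n_bins, [0] * n_bins
    for v, e in zip(values, errors):
        i = min(int((v - lo) / width), n_bins - 1)
        sums[i] += abs(e)
        counts[i] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]


def forget_and_oversample(rows: Sequence[Row], errors: Sequence[float],
                          keep_last: int, error_threshold: float,
                          extra_copies: int = 1) -> List[Row]:
    """Mitigation: build a retraining set from time-ordered rows.

    Keeps only the newest `keep_last` rows (must be >= 1), then repeats
    rows whose absolute error exceeds `error_threshold`.
    """
    recent = list(zip(rows, errors))[-keep_last:]   # forgetting
    out: List[Row] = []
    for row, err in recent:
        out.append(row)
        if abs(err) > error_threshold:              # over-sampling
            out.extend([row] * extra_copies)
    return out


# Toy data: error jumps for the four newest, highest-valued samples.
rows = [(float(i), 2.0 * i) for i in range(8)]
errors = [0.1] * 4 + [0.9] * 4

print(drift_detected(errors[:4] * 25, errors[4:] * 25))                    # True
print(mean_error_per_bin([r[0] for r in rows], errors))                    # [0.1, 0.1, 0.9, 0.9]
print(len(forget_and_oversample(rows, errors, keep_last=6,
                                error_threshold=0.5)))                     # 10
```

In this toy, the per-bin summary shows error concentrated in the upper half of the feature range, and the retraining set drops the two oldest rows while doubling the four high-error ones. The temporal-ensemble part of mitigation is not covered by this sketch.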

Results

The researchers tested LEAF on more than four years of cellular network data from a major US metropolitan area. Compared to periodic and triggered retraining, LEAF achieved:

- Better model accuracy while requiring up to 76.9% fewer model retrains

- Consistent performance improvements across different types of key performance indicators (KPIs)

- Effective drift management in both urban and suburban environments

- Clear explanations of drift causes that operators could act upon

Why This Matters

LEAF represents a significant advance in making machine learning more practical for cellular network operations by:

- Reducing the operational overhead of maintaining ML models

- Providing transparency into why and how models degrade

- Offering an automated way to maintain model accuracy over time

- Adapting to both gradual changes and sudden shifts in network conditions

As 5G networks continue to roll out and network complexity increases, tools like LEAF will become increasingly important for maintaining reliable ML-based network management systems.

Looking Forward

While LEAF was developed for cellular networks, its approach could potentially be applied to other domains facing similar drift challenges. The researchers have released a subset of their dataset to encourage further work in this area.

The framework highlights the importance of not just detecting drift, but understanding and explaining it to create more robust ML systems. As machine learning becomes more deeply embedded in critical infrastructure, approaches like LEAF that combine performance with explainability will be essential.

Resources

Access the released dataset accompanying the paper at this page: LEAF Dataset

Bibtex Citation

@article{liu2023leaf,

title={LEAF: Navigating Concept Drift in Cellular Networks},

author={Liu, Shinan and Bronzino, Francesco and Schmitt, Paul and Nitin Bhagoji, Arjun and Feamster, Nick and Crespo, Hector Garcia and Coyle, Timothy and Ward, Brian},

journal={Proceedings of the ACM on Networking},

year={2023}

}
